For a given number n, we need to find the smallest palindrome that is numerically larger than n. The simple approach, which is too slow for large numbers, is to test n + 1, n + 2, ... in turn, incrementing by 1 until we reach a number that is a palindrome.
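For comparison, the brute-force approach can be sketched as below. This is a minimal illustration that assumes the number and its answer fit in an `unsigned long long`; the helper names `isPalindrome` and `nextPalindromeNaive` are hypothetical. The loop can run for many steps because consecutive palindromes can be far apart: the next palindrome after 1001 is 1111, 110 steps away, and the gaps keep growing with the number of digits.

```cpp
#include <cassert>
#include <limits>
#include <string>

// Returns true if the decimal digits of x read the same in both directions.
bool isPalindrome(unsigned long long x) {
    const std::string d = std::to_string(x);
    return std::string(d.rbegin(), d.rend()) == d;
}

// Brute force: test n + 1, n + 2, ... until a palindrome is found.
unsigned long long nextPalindromeNaive(unsigned long long n) {
    // Guards only the first increment: n + 1 must not wrap around to 0.
    assert(n < std::numeric_limits<unsigned long long>::max());
    unsigned long long candidate = n + 1;
    while (!isPalindrome(candidate))
        ++candidate;
    return candidate;
}
```

For example, `nextPalindromeNaive(123)` returns 131 after checking the seven numbers from 124 to 130 and rejecting them.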

A smarter algorithm is as follows :

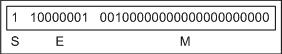

1. Split the string into the left and right halfs

2. Compare the last digit in the left half and the first digit in the right half.

a. If right is greater than the left, increment the left and stop

b. If right is less than the left, stop

c. If right is equal to the left, then continue the step 3 with the next the previous digit and so on

3. Take the left half and append the reverse to it.
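The three steps can be sketched directly on the digit string. This is a minimal, separate sketch under stated assumptions: the input has no leading zeros, inputs made only of 9s (9, 99, 999, ...) are treated as a special case, and the helper names `incrementDecimal` and `nextPalindromeSteps` are hypothetical. Incrementing the left half as a whole takes care of any carry inside it.

```cpp
#include <cassert>
#include <string>

// Adds 1 to a non-empty decimal string, e.g. "129" -> "130", "99" -> "100".
std::string incrementDecimal(std::string digits) {
    int i = static_cast<int>(digits.size()) - 1;
    while (i >= 0 && digits[i] == '9') {
        digits[i] = '0';
        --i;
    }
    if (i < 0)
        return '1' + digits;
    ++digits[i];
    return digits;
}

// Steps 1-3: smallest palindrome strictly greater than `num`
// (a decimal string without leading zeros).
std::string nextPalindromeSteps(const std::string& num) {
    assert(!num.empty());
    const int len = static_cast<int>(num.size());

    // Special case (assumption of this sketch): all 9s -> 1, len - 1 zeros, 1.
    if (num.find_first_not_of('9') == std::string::npos)
        return '1' + std::string(len - 1, '0') + '1';

    // Step 1: the left half keeps the middle digit when len is odd.
    std::string left = num.substr(0, (len + 1) / 2);

    // Step 2: compare mirrored pairs, moving outwards from the centre.
    bool mustIncrement = true;  // stays true if num is already a palindrome
    for (int l = len / 2 - 1, r = (len + 1) / 2; l >= 0; --l, ++r) {
        if (num[r] > num[l]) { mustIncrement = true; break; }   // case (a)
        if (num[r] < num[l]) { mustIncrement = false; break; }  // case (b)
        // case (c): equal digits, keep moving outwards
    }
    if (mustIncrement)
        left = incrementDecimal(left);  // cannot grow: num is not all 9s

    // Step 3: append the reversed left half, skipping the middle digit if len is odd.
    const std::string right(left.rbegin() + len % 2, left.rend());
    return left + right;
}
```

For example, `nextPalindromeSteps("12345")` gives `"12421"` and `nextPalindromeSteps("1223")` gives `"1331"`.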

Complications arise when the number has an odd length and when the new palindrome is longer than the given number. To handle both, the program below first mirrors the left half onto the right half and then checks whether the result is still less than or equal to the given number. If it is, it adds 1 to the middle digit (to both middle digits when the length is even) and mirrors the change. If a middle digit is a 9, it becomes 0 and the carry moves one position outwards on both sides, repeating until a digit that is not 9 is found and incremented. If no such digit exists, every digit was 9: a 1 is added to the start of the number and the last digit is set to 1, so 999 becomes 1001. The C++ code for the procedure is given below:


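```cpp
#include <iostream>
#include <string>
using namespace std;

int main() {
    string str;
    cin >> str;  // the given number, read as a string of digits

    string s = str;
    int n = static_cast<int>(s.size());

    // inc: first index of the right half; dec: last index of the left half.
    // For an odd length both point at the middle digit.
    int inc, dec;
    inc = n / 2;
    dec = inc;
    if (n % 2 == 0)
        dec--;

    // Mirror the left half onto the right half.
    for (int i = inc, j = dec; i < n && j >= 0; i++, j--)
        s[i] = s[j];

    // If the mirrored number is not larger than the input, add 1 to the
    // middle digit(s) and carry outwards through any 9s.
    while (s <= str && s.size() <= str.size()) {
        int i = dec, j = inc;
        // Check the bound before indexing so s[-1] is never read.
        while (i >= 0 && s[i] == '9') {
            s[i] = s[j] = '0';
            i--;
            j++;
        }

        if (i < 0) {
            // Every digit was 9 (e.g. 999 -> 000): prepend 1 and set the
            // last digit to 1, giving 1001.
            s = '1' + s;
            int l = s[s.size() - 1] - '0';
            l++;
            s[s.size() - 1] = static_cast<char>(l + '0');
        } else {
            int l = s[i] - '0';
            l++;
            s[i] = s[j] = static_cast<char>(l + '0');
        }
    }

    cout << s << endl;
    return 0;
}
```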
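One way to gain confidence in the mirror-and-carry procedure is to compare it against brute force on small inputs. The sketch below wraps the program's logic in a function and checks every n below a limit. The function names `nextPalindromeMirror`, `isPalindromeValue` and `agreesWithBruteForce` are hypothetical, and the brute-force side assumes the values fit in an `unsigned long`.

```cpp
#include <cassert>
#include <string>

// The mirror-and-carry procedure from the program, wrapped as a function.
// Assumes `str` is a non-empty digit string without leading zeros.
std::string nextPalindromeMirror(const std::string& str) {
    assert(!str.empty());
    std::string s = str;
    const int n = static_cast<int>(s.size());
    const int inc = n / 2;                         // first index of the right half
    const int dec = (n % 2 == 0) ? inc - 1 : inc;  // last index of the left half

    for (int i = inc, j = dec; i < n && j >= 0; ++i, --j)
        s[i] = s[j];                               // mirror left onto right

    while (s <= str && s.size() <= str.size()) {
        int i = dec, j = inc;
        while (i >= 0 && s[i] == '9') {            // carry outwards through 9s
            s[i] = s[j] = '0';
            --i;
            ++j;
        }
        if (i < 0) {                               // all 9s: 99...9 -> 10...01
            s = '1' + s;
            s.back() = '1';
        } else {
            s[i] = s[j] = static_cast<char>(s[i] + 1);
        }
    }
    return s;
}

// Brute-force palindrome check on the decimal digits of x.
bool isPalindromeValue(unsigned long x) {
    const std::string d = std::to_string(x);
    return std::string(d.rbegin(), d.rend()) == d;
}

// Returns true if the mirror-and-carry result matches brute force for every n < limit.
bool agreesWithBruteForce(unsigned long limit) {
    for (unsigned long n = 0; n < limit; ++n) {
        unsigned long next = n + 1;
        while (!isPalindromeValue(next))
            ++next;
        if (nextPalindromeMirror(std::to_string(n)) != std::to_string(next))
            return false;
    }
    return true;
}
```

Calling `agreesWithBruteForce(20000)` checks every number below 20000, which covers odd and even lengths as well as the all-9 cases 9, 99, 999 and 9999.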